
GeNN

4.9.0

GPU enhanced Neuronal Networks (GeNN)


In this tutorial we will go through step-by-step instructions on how to create and run your first PyGeNN simulation from scratch.

We will use a pre-defined Hodgkin-Huxley neuron model (NeuronModels::TraubMiles) and create a simulation consisting of ten such neurons without any synaptic connections. We will run this simulation on a GPU and plot the results using Matplotlib.

The first step is to create a new Python script. Create a new directory and, within that, create a new empty file called tenHH.py using your favourite text editor.

This Python script will contain the definition of the network model as well as the code to simulate the model and plot the results. First, we need to import some classes from PyGeNN so we can reference them succinctly. Type in your tenHH.py file:

Three standard elements of the model definition are the simulation step size, the name of the model and the precision to simulate with:
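These settings might be sketched as follows. The sketch assumes that the model class takes the precision and the model name as its first two constructor arguments and exposes the step size as a `dT` attribute, as in PyGeNN 4.x. The helper `create_model`, the constant names and the model name "tenHH" are our own illustrative choices. The class is passed in as an argument so that the snippet itself does not import PyGeNN.

```python
# Illustrative constants; the names are ours, not part of PyGeNN.
PRECISION = "float"   # floating point precision to simulate with
MODEL_NAME = "tenHH"  # name of the model (assumed to match the script name)
DT_MS = 0.1           # simulation step size in ms


def create_model(model_class):
    """Create a model object and set its simulation step size.

    model_class is expected to be pygenn.genn_model.GeNNModel; it is
    passed in so that this sketch does not import PyGeNN itself.
    """
    # Assumption: the constructor takes (precision, model_name).
    model = model_class(PRECISION, MODEL_NAME)
    # Assumption: the step size is exposed as the dT attribute.
    model.dT = DT_MS
    return model
```

With PyGeNN installed, `from pygenn.genn_model import GeNNModel` followed by `model = create_model(GeNNModel)` should give a model object like the one used in the rest of this tutorial.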

The step size is 0.1 in the usual time units. The typical units in GeNN are ms, mV, nF, and μS, so this defines DT = 0.1 ms.

The actual model definition makes use of the pygenn.GeNNModel.add_neuron_population and pygenn.GeNNModel.add_synapse_population member functions of the pygenn.GeNNModel object. The arguments to a call to pygenn.GeNNModel.add_neuron_population are:

- pop_name: Unique name of the neuron population
- num_neurons: Number of neurons in the population
- neuron: The type of neuron model. This should either be a string containing the name of a built-in model or a user-defined neuron type returned by pygenn.genn_model.create_custom_neuron_class (see Neuron models).
- param_space: Dictionary containing parameters of this neuron type
- var_space: Dictionary containing initial values or initialisation snippets for variables of this neuron type

We first create the parameter and initial variable dictionaries:
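As a rough illustration, the two dictionaries could look like the following. The key names are those of the TraubMiles parameters and state variables. The numerical values are assumptions recalled from GeNN's own examples and should be checked against the GeNN documentation before reuse; only the initial membrane potential of -60 mV is stated in this tutorial. The names `p` and `ini` are illustrative.

```python
# Parameters of the TraubMiles neuron model, in GeNN's usual units.
# Assumption: values recalled from GeNN's examples; verify before reuse.
p = {
    "gNa": 7.15,     # Na conductance [uS]
    "ENa": 50.0,     # Na equilibrium potential [mV]
    "gK": 1.43,      # K conductance [uS]
    "EK": -95.0,     # K equilibrium potential [mV]
    "gl": 0.02672,   # leak conductance [uS]
    "El": -63.563,   # leak equilibrium potential [mV]
    "C": 0.143,      # membrane capacitance [nF]
}

# Initial values of the state variables.
ini = {
    "V": -60.0,       # membrane potential [mV]
    "m": 0.0529324,   # Na channel activation probability (assumed value)
    "h": 0.3176767,   # probability of Na channel not being blocked (assumed value)
    "n": 0.5961207,   # K channel activation probability (assumed value)
}
```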

Having defined the parameter values and initial values we can now create the neuron population,
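A minimal sketch of this call, passing the arguments positionally in the order listed above, is given here. The helper `add_hh_population` and the population name "Pop1" are hypothetical; `model` is assumed to be a pygenn.GeNNModel object.

```python
def add_hh_population(model, params, init_vars, num_neurons=10):
    """Add a population of TraubMiles neurons to model and return it."""
    # Arguments follow the order described above:
    # pop_name, num_neurons, neuron, param_space, var_space.
    return model.add_neuron_population(
        "Pop1",          # hypothetical unique population name
        num_neurons,     # ten neurons by default
        "TraubMiles",    # name of the built-in neuron model
        params,          # parameter dictionary
        init_vars)       # initial values of the state variables
```

Keeping the returned population object around is useful, because later steps need it to read back the state variables.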

This model definition will generate code for simulating ten Hodgkin-Huxley neurons on a GPU or CPU. The next stage is to write the code that sets up the simulation, handles data input and output, and generally defines the numerical experiment to be run. To build your model description into simulation code, simply call pygenn.GeNNModel.build.

If you have an NVIDIA GPU and CUDA_PATH is correctly configured, this will generate and build CUDA code to simulate your model. If not, it will generate and build C++ code. This completes the model definition in this example. The complete tenHH.py file now should look like this:

The code to simulate the model has now been generated. To make use of this code, we need to load it into PyGeNN:
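Taken together, building and loading could be sketched as below. The sketch assumes that `model` is a pygenn.GeNNModel object offering `build()` and `load()` methods without required arguments; the helper `build_and_load` is a name of our own.

```python
def build_and_load(model):
    """Generate and compile the simulation code, then load it."""
    # Generate and build the CUDA or C++ code for the model.
    model.build()
    # Load the freshly built code so the model can be simulated.
    model.load()
    return model
```

The order matters here: loading can only succeed once the code has been built.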

For the purposes of this tutorial we will initially simply run the model for 200ms and print the final neuron variables. To do so, we add:
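One way to sketch this loop is shown here. It assumes that the model exposes the current simulation time in ms as `model.t` and that each call to pygenn.GeNNModel.step_time advances it by one step of DT. The helper `run_until` is hypothetical.

```python
def run_until(model, t_stop_ms=200.0):
    """Step the model until its clock reaches t_stop_ms.

    Returns the number of time steps that were taken.
    """
    steps = 0
    # Assumption: model.t is the current simulation time in ms.
    while model.t < t_stop_ms:
        model.step_time()
        steps += 1
    return steps
```

With DT = 0.1 ms, running for 200 ms corresponds to roughly 2000 calls to step_time.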

We then need to copy the result back to the host (this does nothing if you are running the model on a CPU) before printing it out:

pygenn.NeuronGroup.pull_state_from_device copies all relevant state variables of the neuron group from the GPU to the CPU main memory. We can then get direct access to the host-allocated memory using a 'view' and finally output the results to stdout by looping through all 10 neurons and outputting the state variables via their views.
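The pull-then-view pattern described above might look like this. The sketch assumes that the population offers its variables through a `vars` dictionary whose entries have a `view` attribute, as in PyGeNN 4.x. The helper `final_state_lines` and the output format are our own choices.

```python
def final_state_lines(pop, var_names=("V", "m", "h", "n")):
    """Return one formatted line of state variables per neuron."""
    # Copy the group's state from the device to host memory
    # (this does nothing when the model runs on the CPU).
    pop.pull_state_from_device()
    # Assumption: pop.vars[name].view gives access to the host memory.
    views = [pop.vars[name].view for name in var_names]
    # zip(*views) yields one tuple of variable values per neuron.
    return [" ".join("%f" % value for value in row) for row in zip(*views)]
```

Printing the result is then a matter of `for line in final_state_lines(pop): print(line)`, where `pop` is the population object returned by add_neuron_population.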

This completes the first version of the script. The complete tenHH.py file should now look like

You can now execute your newly-built simulator by running tenHH.py with your Python interpreter (for example, python tenHH.py).

The output should consist of one line per neuron, each listing that neuron's final state variables.

This is not particularly interesting as we are just observing the final value of the membrane potentials. To see what is going on in the meantime, we need to copy intermediate values from the device into a data structure and plot them. This can be done in many ways but one sensible way of doing this is to replace the calls to pygenn.GeNNModel.step_time in tenHH.py with something like this:
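A dependency-free sketch of such a recording loop follows. It stores the membrane potentials in a plain list of rows rather than in an array, which is a simplification of ours. It assumes that `model.t` holds the simulation time in ms, that `pop.vars["V"].view` gives access to the host copy of V, and that the population offers `pull_var_from_device` to copy a single variable, as in PyGeNN 4.x. The helper `record_voltages` is hypothetical.

```python
def record_voltages(model, pop, t_stop_ms=200.0):
    """Step the model and return one row of V values per time step."""
    # Assumption: the view refers to host memory that each pull overwrites.
    v_view = pop.vars["V"].view
    rows = []
    while model.t < t_stop_ms:
        model.step_time()
        # Copy only the membrane potential back from the device.
        pop.pull_var_from_device("V")
        # Store a copy, since the view is overwritten by the next pull.
        rows.append(list(v_view))
    return rows
```

Each row holds the membrane potentials of all neurons at one time step, so the result can be converted to a two-dimensional array for plotting.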

You will also need to add the corresponding import (for example, NumPy if you store the values in a NumPy array) to the top of tenHH.py.

Finally, if we add:

to the bottom of tenHH.py and:

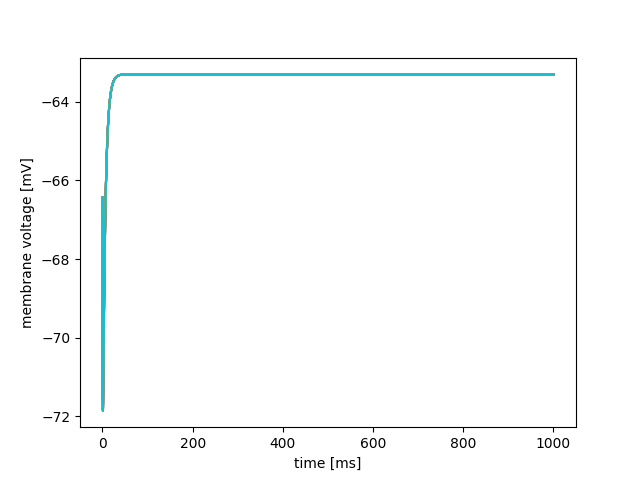

to the top, if you run the simulation again as described above you should observe dynamics like this:

So far, however, the neurons are not connected and do not receive input. As the NeuronModels::TraubMiles model is silent under such conditions, the membrane voltages of the 10 neurons will simply drift from the -60 mV they were initialised at to their resting potential.